In the wake of motorcycle safety being reported at an all-time low, we’ve covered quite a bit about what safe riding means for the two-wheeled industry.

These concerns are a part of the latter of two different schools of thought:

- That today’s roads aren’t yet ready for automated driving

- That today’s roads are ready for automated driving, provided technology can create safety precautions that outweigh the dangers of autopilot malfunctions for all on the road

In the latter camp sits Honda, which has released the world’s first “Intelligent Driver-Assistive Technology” with a goal of zero fatalities by 2050, alongside other bike brands with similar goals.

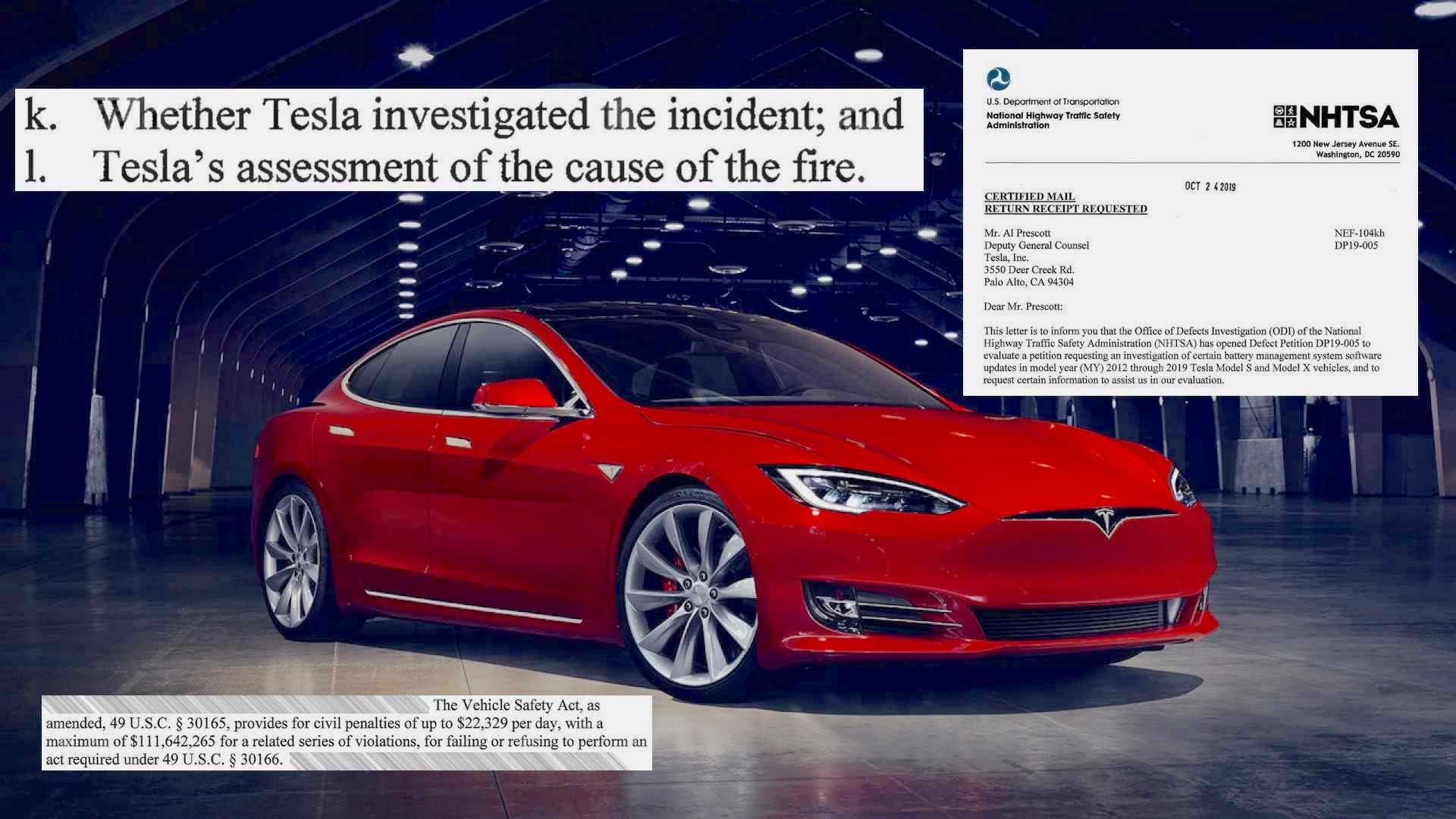

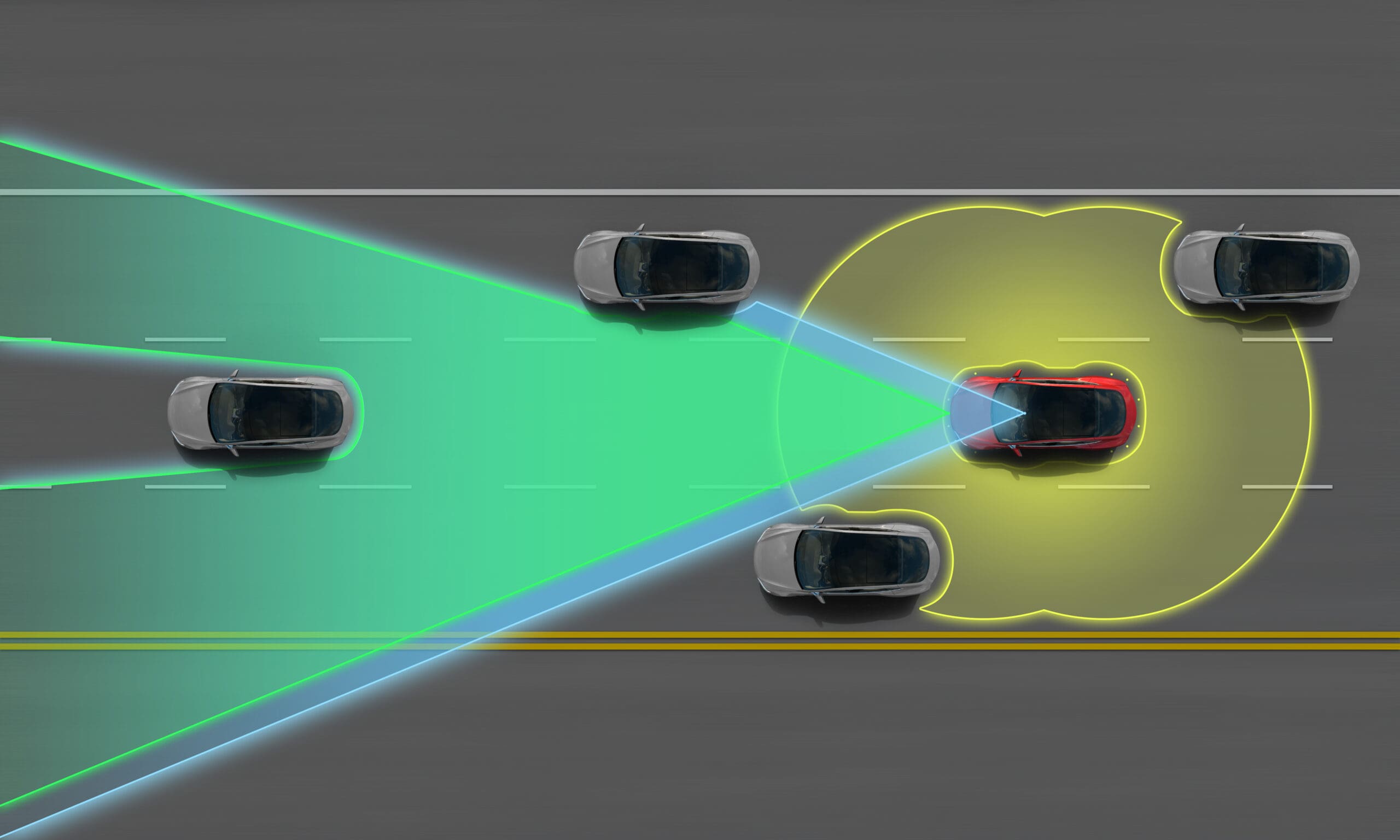

Automotive company Tesla is also in the latter category, and its machines are out and about on today’s roads with their own Autopilot systems – systems that are being called into question, and with good reason.

According to ABC News, the NHTSA is currently investigating two crashes involving Teslas and motorcyclists. In both situations, the riders were killed.

Concern as to the ‘auto’ state of Tesla’s Autopilot system has resulted in NHTSA’s teams being dispatched to look into the potential hazard.

“The agency suspects that Tesla’s partially automated driver-assist system was in use in each,” states the report.

“The first crash…a white Tesla Model Y SUV was traveling east in the high occupancy vehicle lane. Ahead of it was a rider on a green Yamaha V-Star motorcycle…”

“At some point, the vehicles collided, and the unidentified motorcyclist was ejected from the Yamaha. He was pronounced dead at the scene…whether or not the Tesla was operating on Autopilot remains under investigation.”

The accidents have purportedly been added by the NHTSA to a thick file folder that includes ‘over 750 complaints that Teslas can brake for no reason,’ as well as issues with ‘Teslas striking emergency vehicles parked along freeways.’

“The second crash …a Tesla Model 3 sedan was behind a Harley-Davidson motorcycle…the driver of the Tesla did not see the motorcyclist and collided with the back of the motorcycle, which threw the rider from the bike…Landon Embry, 34, of Orem, Utah, died at the scene.”

“The Tesla driver told authorities that he had the vehicle’s Autopilot setting on.”

In reaction to the mayhem, Michael Brooks, the acting executive director of the nonprofit Center for Auto Safety, has asked the NHTSA to recall Tesla’s Autopilot.

“It is not recognizing motorcyclists, emergency vehicles, or pedestrians.”

“It’s pretty clear to me (and it should be to a lot of Tesla owners by now), this stuff isn’t working properly, and it’s not going to live up to the expectations, and it is putting innocent people in danger on the roads,” Brooks adds.

So how safe are Teslas?

According to Department of Transportation figures cited by The New York Times, Tesla’s Autopilot systems are considered to be twice as safe when used on the highway, with Tesla accidents making up a decent percentage of the general collisions category. The maker itself states that ‘one accident [happens] for every 2.42 million miles driven.’

Since both of the above accidents happened on highways (State Route 91 in Riverside and Interstate 15 near Draper, respectively), the concern will be at the top of NHTSA’s docket for the coming season.

We will keep you up-to-date on the results of NHTSA’s investigation as the information comes down the pipeline; for other related news, be sure to check back at our shiny new webpage, and stay informed by subscribing to our newsletter.

Which school of thought do you best identify with?

Drop a comment below (we love hearing from you), and as ever – stay safe on the twisties.